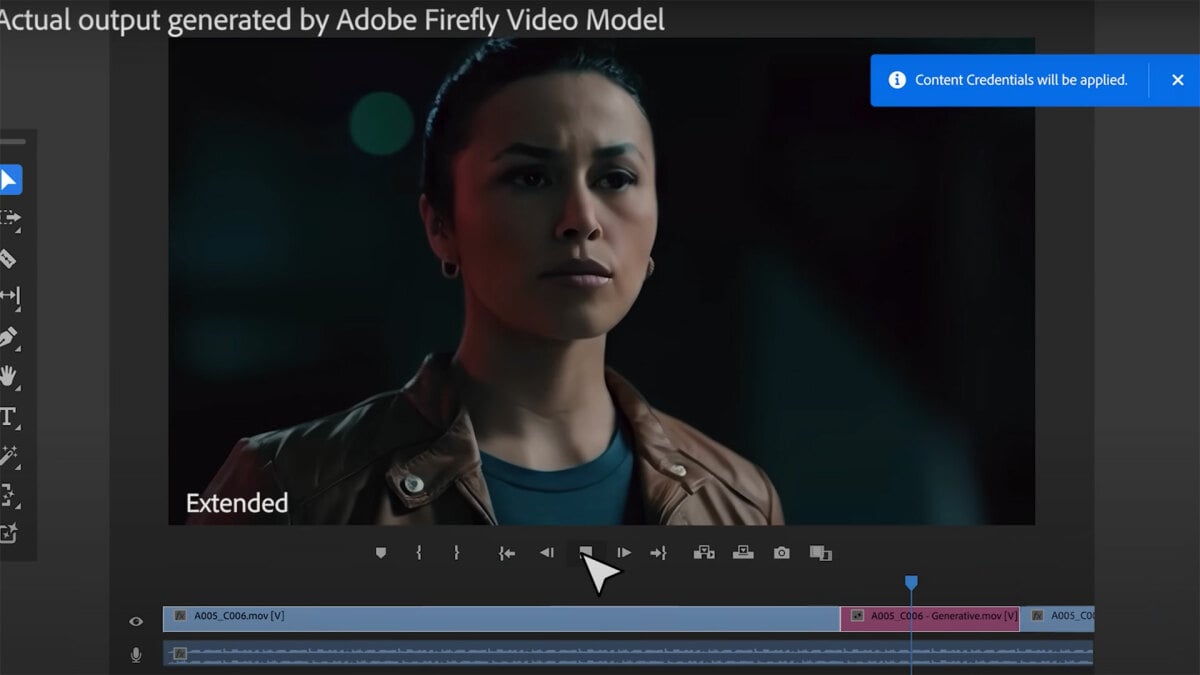

The video stresses that “content credentials” will “always make transparent whether AI was used”.

Should absolutely be legally required for all commercial and political usage in these hyper-propagandized times IMO…

Needlessly dangerous. The only positive outcome would be to make people aware of what is possible. The danger is that non-marked media will appear more credible.

Just assume everything is AI/deepfake, and then go back to the real world to remember that AI robots are just around the corner.

I for one welcome our new robot overlords and will turn on humanity in exchange for not having to work and being able to live a comfortable lifestyle.

That’s the idea, but they’ll probably just cull the proletariat until homeostasis is achieved.

Definitely

Any article with a headline like that is at least suspicious but most likely fucking garbage. AI Boogeyman rage/fear bait is so base and pandering.

The article: Adobe did a thing. It was AI. AI looks real. Fin.

Are those two paragraphs the entire article? Or is there more buried under the ads?

That’s it, just a half-baked FUD piece.

Not entirely sure what the problem is here.

Not only could it lead to thousands of jobs being cut (it takes more than just actors to make a movie) it also makes it dead simple to put real people into a video that shows them doing something illegal. Grainy security cam LoRA anyone?

As for manipulated videos as legal evidence, if these products push for authenticity measures of security footage at the hardware/capture level, that’s a good thing. Adobe is just commoditizing what’s already been possible for some time now.

If trends continue, open-source solutions will be at this level within a year if not months. At that point you’re free to “watermark” the content or not.

The jobs will be replaced by the indie companies that this tech will help foster. A few years ago it was a fantasy to put out quality products that could rival Hollywood or triple-A game companies. That gap is quickly being bridged.

“New thing bad”

Okay I guess.

Not bad, but scawy

Meh, video editing has been wild for a couple of years; this is the logical next step.

Can we take a minute to stop and assess where Adobe is obtaining its training data? Everyone is up in arms about the OpenAI devs scraping DA and such, but Adobe is 100% training on the entirety of Behance and the Adobe Cloud. Those are things that are not public: our personal files that we never intended for others to see, our private albums of our children or our wives/husbands/partners, and parts of NDA-restricted projects that are stored in Adobe Cloud automatically and supposedly don’t violate our NDAs.

Where are the pitchforks? Where is the outrage? This is 1000x worse than some desperate AI engineer staring at a publicly visible, publicly available training set that was already tagged and described in detail and was begging to be used. People lost their shit over that one. Why does Adobe get a pass?

Aren’t they training it exclusively from their own data sets which presumably they already own the licenses for?

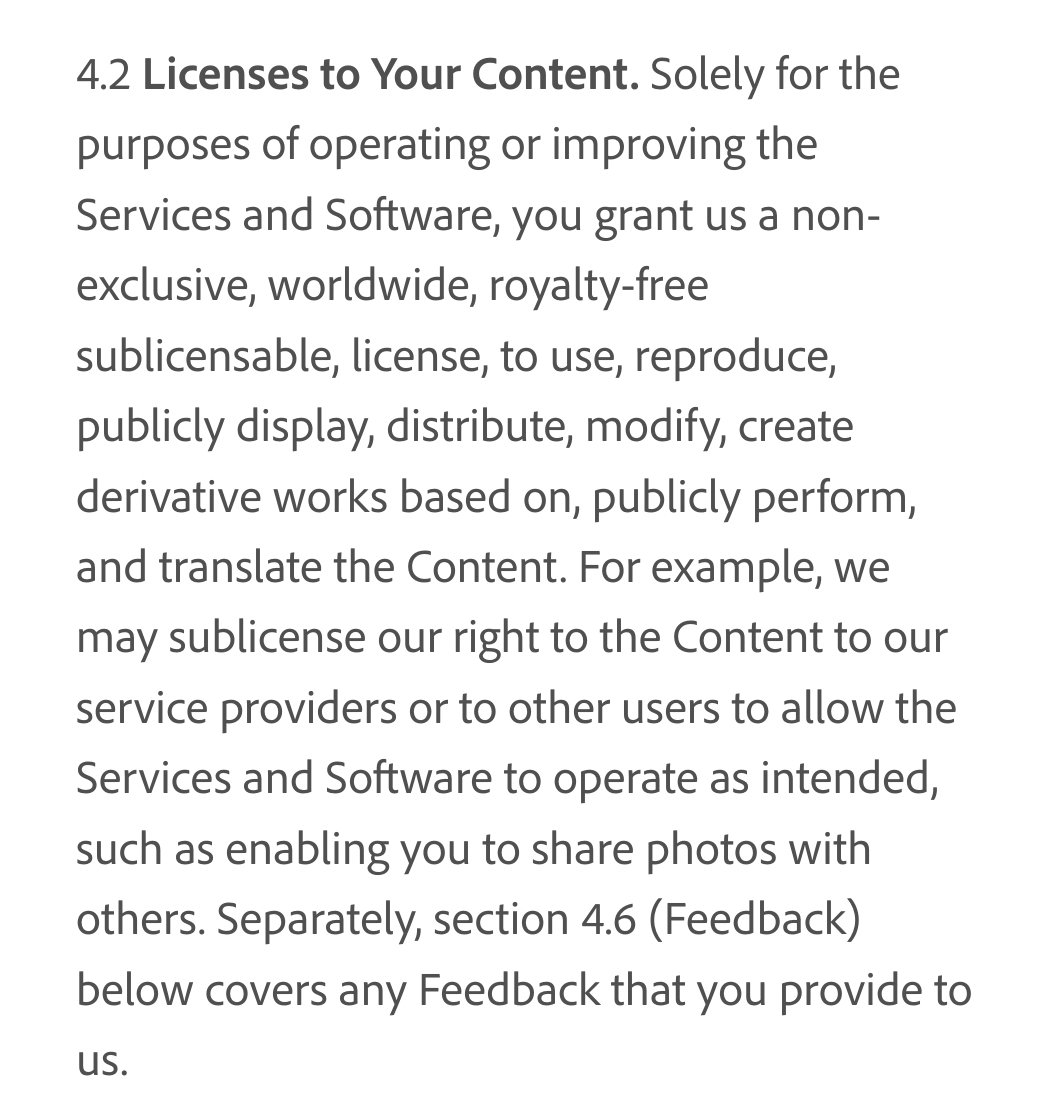

That little “derivative works” bit in the middle gives them a license to use the files stored in Creative Cloud to train AIs. So yes, they are using data sets they have a license for. It just happens to be our data that they took the license on, and we paid them to do it.

Quell the outrage.

They are paying for it. Here’s a quick link from the top of search.

https://qz.com/adobe-ai-training-data-artists-pay-1851407658

That’s fun; glad to see they are paying people now. What I didn’t see in there is when, in the multi-year process it takes to develop toolsets and train checkpoints, they paid for the rights to create derivative works. The article is dated a few days ago and is written in the present tense: they are NOW paying. The AI is trained. The tool is built. It takes tens of thousands of images to train a generative model from scratch; I would expect decades of footage for a video model. So if the model is already trained and the paying is new…?

Also, they don’t have to ask, or pay… They already have the rights to all content stored in Creative Cloud (per the EULA).

Legally, AI training is a “derivative work”. So before I would believe that the greedy gaping maw that is Adobe did not just use the millions of images and thousands of years of footage they have the legal right to use, and that THEY are actually PAID for, I would need a letter from the lead engineers on Adobe’s AI dev team, signed by every dev who has worked on it and disseminated separately from any official Adobe channel, stating that they only used paid training material at every stage of developing the tools. They know they can pay now because it is a drop in the bucket compared to Creative Cloud fees, and it is great PR and an even better smokescreen. There is precisely 0 chance they are going to receive enough good, usable footage through this program to train an AI from scratch.

“Absolutely Terrifying”

Really? Did he watch the same video I saw on that page? That b-roll was really bad.

I get that it’ll only get better, but generative AI models will never understand filmmaking techniques because they can’t. That’s not how these models are built.

It can create a city skyline and pan right, but it’ll never know why a pan right in that scene was appropriate or how the lighting fits in with the rest of the scene. It’ll never come up with new ways of “filming” a scene, because it’s all built on what already exists. There’s no style to generative AI.

Yes. It is a new tool for vfx artists and not a replacement. If they can deliver higher quality for less money, you’d expect them to be more in demand.

“Never” is a big word, but it’s really not clear how one would train an AI to know what it should generate. See the hubbub about diversity in Google’s image generator. I see no theoretical problems, but in practice it’s just not going to happen anytime soon.

With the Pro version you can create generative AI film in color. With the Legibull feature you’re allowed to display text on screen for up to 10 frames of your video.