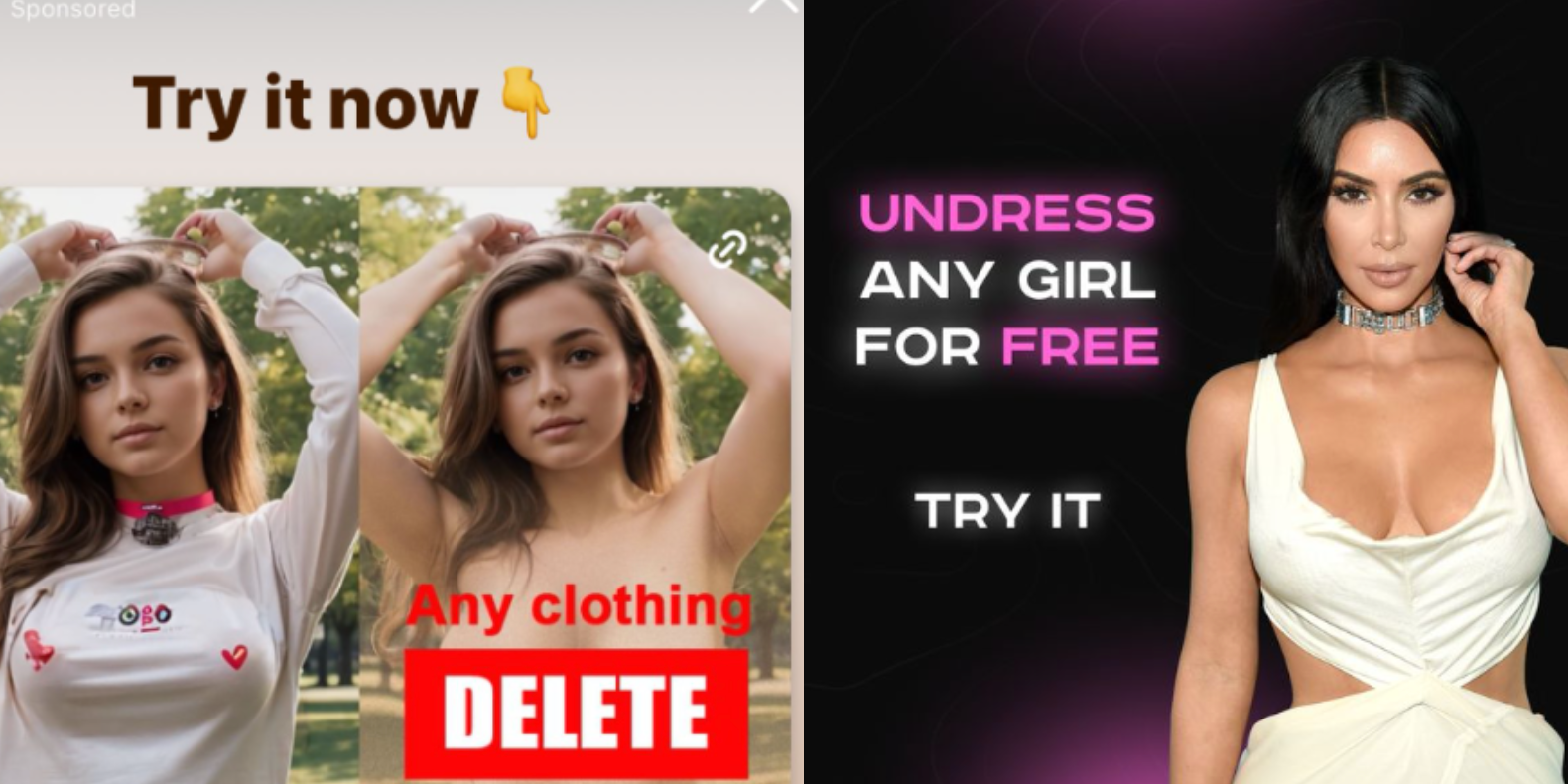

Instagram is profiting from several ads that invite people to create nonconsensual nude images with AI image generation apps, once again showing that some of the most harmful applications of AI tools are not hidden on the dark corners of the internet, but are actively promoted to users by social media companies unable or unwilling to enforce their policies about who can buy ads on their platforms.

While parent company Meta’s Ad Library, which archives ads on its platforms, who paid for them, and where and when they were posted, shows that the company has previously taken down several of these ads, many ads that explicitly invited users to create nudes, and some of the ad buyers behind them, were still active until I reached out to Meta for comment. Some of these ads were for the best-known nonconsensual “undress” or “nudify” services on the internet.

It’s all so incredibly gross. Using “AI” to undress someone you know is extremely fucked up. Please don’t do that.

I’m going to undress Nobody. And give them sexy tentacles.

Behold my meaty, majestic tentacles. This better not awaken anything in me…

Same vein as “you should not mentally undress the girl you fancy”. It’s just a support for that. Not that I have used it.

Don’t just upload someone else’s image without consent, though. That’s even illegal in most of Europe.

Would it be any different if you learn how to sketch or photoshop and do it yourself?

You say that as if photoshopping someone naked isn’t fucking creepy as well.

Creepy to you, sure. But let me add this:

Should it be illegal? No, and good luck enforcing that.

You’re at least right on the enforcement part, but I don’t think the illegality of it should be as hard of a no as you think it is.

This is also fucking creepy. Don’t do this.

I am not saying anyone should do it and don’t need some internet stranger to police me thankyouverymuch.

Yet another example of multi billion dollar companies that don’t curate their content because it’s too hard and expensive. Well too bad maybe you only profit 46 billion instead of 55 billion. Boo hoo.

It’s not that it’s too expensive, it’s that they don’t care. They won’t do the right thing until and unless they are forced to, or it affects their bottom line.

Wild that since the rise of the internet it’s like they decided advertising laws don’t apply anymore.

But Copyright though, it absolutely does, always and everywhere.

Shouldn’t AI be good at detecting and flagging ads like these?

“Shouldn’t AI be good” nah.

Build an AI that will flag immoral ads and potentially lose you revenue

Build an AI to say you’re using AI to moderate ads but it somehow misses the most profitable bad actors

Which do you think Meta is doing?

Your example is 9 billion difference. This would not cost 9 billion. It wouldn’t even cost 1 billion.

Well too bad maybe you only profit 46 billion instead of 55 billion.

I can’t possibly imagine this quality of clickbait is bringing in $9B annually.

Maybe I’m wrong. But this feels like the sort of thing a business does when its trying to juice the same lemon for the fourth or fifth time.

It’s not that the clickbait is bringing in $9B, it’s that it would cost $9B to moderate it.

Good, let all celebs come together and sue zuck into the ground

It’s funny how many people leapt to the defense of Title V of the Telecommunications Act of 1996 Section 230 liability protection, as this helps shield social media firms from assuming liability for shit like this.

Sort of the Heads-I-Win / Tails-You-Lose nature of modern business-friendly legislation and courts.

AI gives creative license to anyone who can communicate their desires well enough. Every great advancement in the media age has been pushed in one way or another with porn, so why would this be different?

I think if a person wants visual “material,” so be it. They’re doing it with their imagination anyway.

Now, generating fake media of someone for profit or malice, that should get punishment. There’s going to be a lot of news cycles with some creative perversion and horrible outcomes intertwined.

I’m just hoping I can communicate the danger of some of the social media platforms to my children well enough. That’s where the most damage is done with the kind of stuff.

The porn industry is, in fact, extremely hostile to AI image generation. How can anyone make money off porn if users simply create their own?

Also I wouldn’t be surprised if it’s false advertising and clicking the ad will in fact just take you to a webpage with more ads, and a link from there to more ads, and more ads, and so on until eventually users give up (and hopefully click on an ad).

Whatever’s going on, the ad is clearly a violation of Instagram’s advertising terms.

I’m just hoping I can communicate the danger of some of the social media platforms to my children well enough. That’s where the most damage is done with the kind of stuff.

It’s not just your children you need to communicate it to. It’s all the other children they interact with. For example I know a young girl (not even a teenager yet) who is being bullied on social media lately - the fact she doesn’t use social media herself doesn’t stop other people from saying nasty things about her in public (and who knows, maybe they’re even sharing AI generated CSAM based on photos they’ve taken of her at school).

YouTube has been doing it for like 6 or 7 months, even with famous people in the ads. I remember one for a while with Ortega.

Ortega? The taco sauce?

NSFW-ish

I sat down with tacos as I opened up that reply.

Witch.

I guess that’s an ORTEGAsm

Don’t use that as lube.

So many of these comments are breaking down into arguments of basic consent for pics, and knowing how so many people are, I sure wonder how many of those same people post pics of their kids on social media constantly and don’t see the inconsistency.

ITT: A bunch of creepy fuckers who don’t think society should judge them for being fucking creepy

Isn’t it kinda funny that the “most harmful applications of AI tools are not hidden on the dark corners of the internet,” yet this article is locked behind a paywall?

The proximity of these two phrases meaning entirely opposite things indicates that this article, when interpreted as an amorphous cloud of words without syntax or grammar, is total nonsense.

The arrogant bastards!

That bidding model for ads should be illegal. Alternatively, companies displaying them should be responsible/be able to tell where it came from. Misinformation has become a real problem, especially in politics.

Capitalism works! It breeds innovation like this! good luck getting non consensual ai porn in your socialist government

It’s ironic because the “free market” part of capitalism is defined by consent. Capitalism is literally “the form of economic cooperation where consent is required before goods and money change hands”.

Unfortunately, it only refers to the two primary parties to a transaction, ignoring anyone affected by externalities to the deal.

The above comment was written by a person whose lack of understanding of consent suggests they are almost certainly guilty of sex crimes.

This is not okay, but this is nowhere near the most harmful application of AI.

The most harmful application of AI that I can think of would be disrupting a country’s entire culture via gaslighting social media bots, leading to increases in addiction, hatred, suicide, and murder.

Putting hundreds of millions of people into a state of hopeless depression would be more harmful than creating a picture of a naked woman with a real woman’s face on it.

I don’t want to fall into a slippery slope argument, but I really see this as the tip of a horrible iceberg. Seeing women as sexual objects starts with this kind of non consensual media, but also includes non consensual approaches (like a man who thinks he can subtly touch women on crowded public transport and excuse himself with the lack of space), sexual harassment, sexual abuse, forced prostitution (it’s hard to know for sure, but possibly the majority of prostitution), human trafficking (in which 75%-79% go into forced prostitution, which is why human trafficking is mostly done to women), and even other forms of violence, torture, murder, etc.

Thus, women live their lives in fear (in varying degrees depending on their country and circumstances). They are restricted in many ways. All of this even in first world countries. For example, homeless women fearing going to shelters because of the situation with SA and trafficking that exists there; women retiring from or not entering jobs (military, scientific exploration, etc.) because of their hostile sexual environment; being alert and often scared when alone because they can be targets, etc. I hopefully don’t need to explain the situation in third world countries, just look at what’s legal and imagine from there…

This is a reality, one that is:

Putting hundreds of millions of people into a state of hopeless depression

Again, I want to be very clear, I’m not equating these tools to the horrible things I mentioned. I’m saying that it is part of the same problem in a lighter presentation. It is the tip of the iceberg. It is a symptom of a systemic and cultural problem. The AI by itself may be less catastrophic in consequences, rarely leading to permanent damage (I can only see it being the case if the victim develops chronic or pervasive health problems from the stress of the situation, like social anxiety, or commits suicide). It is still important to acknowledge the whole machinery so we can grasp the scale of what we are facing, and to really face it because something must change. The first steps might be against these “on the surface,” “not very harmful” forms of sexual violence.

Am I the only one who doesn’t care about this?

Photoshop has existed for some time now, so creating fake nudes just became easier.

Also why would you care if someone jerks off to a photo you uploaded, regardless of potential nude edits. They can also just imagine you naked.

If you don’t want people to jerk off to your photos, don’t upload any. It happens with and without these apps.

But Instagram selling apps for it is kinda fucked, since it’s very anti-porn, but then sells apps for it (to children).

I think it’s clear you have never experienced being sexualized when you weren’t okay with it. It’s a pretty upsetting experience that can feel pretty violating. And as most guys rarely if ever experience being sexualized, never mind when they don’t want to be, I’m not surprised people might be unable to empathize.

Having experienced being sexualized when I wasn’t comfortable with it, this kind of thing makes me kinda sick to be honest. People are used to having a reasonable expectation that posting safe for work pictures online isn’t inviting being sexualized. And that it would almost never be turned into pornographic material featuring their likeness, whether it was previously possible with Photoshop or not.

It’s not surprising people would find the loss of that reasonable assumption discomforting given how uncomfortable it is to be sexualized when you don’t want to be. How uncomfortable a thought it is that you can just be going about your life and minding your own business, and it will now be convenient and easy to produce realistic porn featuring your likeness, at will, with no need for uncommon skills not everyone has.

Interesting (wrong) assumption there buddy.

But why would I care how people think of me? If it influences their actions, we gonna start to have problems, tho.

Fair enough, I’m sorry for making assumptions about you.

I do think my points stand though

Something that can also happen: require Facebook login with some excuse, then blackmail the creeps by telling them “pay us this extortion or we’re going to send proof of your creepiness to your contacts”

Another something that can also happen: require Facebook login with some excuse, plant shit on your enemy’s computer, then blackmail them by threatening to frame them as creeps.

Sometimes the reason a method is frowned upon is that it is equally usable for evil as for good.

Plz don look at my digital pp, it make me sad… Maybe others have different feeds but the IG ad feed I know and love promotes counterfeit USD, MDMA, mushrooms, mail order brides, MLM schemes, gun building kits and all kinds of cool shit (all scams through telegram)…so why would they care about an AI image generator that puts digital nipples and cocks on people. Does that mean I can put a cock on Hilary and bobs/vagenes on trump? asking for a friend.

Can you link some of these nudificator apps? Just for research 🤭

Go on Instagram. Like four photos of girls in bikinis. The ad tool will figure out what you want shortly.

I want to put photos of non-humans in there genuinely. I wonder what it would do to a photo of a brick or a giraffe.

New confused boners community methinks.

Or the furries get a little weirder

Nah it won’t do that because the AI is human-only. Unless it has some secret bestiality DLC I don’t know about.